Nov 13, 2023

data.world Team

A new benchmark, published by the authors, demonstrates further enhancements to response accuracy when using knowledge graphs with LLMs. Click here to learn more about the new benchmark.

Large Language Models (LLMs) present enterprises with exciting new opportunities for leveraging their data, from improving processes to creating entirely new products and services. But coupled with excitement for the transformative power of LLMs, there are also looming concerns. Chief among those concerns is the accuracy of LLMs in production. Initial evaluations have found that LLMs will surface false information backed by fabricated citations as fact – also known as “hallucinations.” This phenomenon led McKinsey to cite “inaccuracy” as the top risk associated with generative AI.

Download this first-of-its-kind LLM benchmark report to discover how a Knowledge Graph can improve LLM accuracy by 300% and give your enterprise a competitive edge.

Some context around hallucinations: LLMs function as statistical pattern-matching systems. They analyze vast quantities of data to generate responses based on statistical likelihood – not fact. Therefore, the smaller the dataset – say your organization’s internal data rather than the open internet – the less likely it is that the responses are accurate.

However, research is underway to address this challenge. A growing number of experts from across the industry, including academia, database companies, and industry analyst firms, like Gartner, point to Knowledge Graphs as a means for improving LLM response accuracy.

To evaluate this claim, a new benchmark from Juan Sequeda Ph.D., Dean Allemang Ph.D., and Bryon Jacob, CTO of data.world, examines the positive effects that a Knowledge Graph can have on LLM response accuracy in the enterprise. They compared LLM-generated answers to answers backed by a Knowledge Graph, via data stored in a SQL database. The benchmark found evidence of a significant improvement in the accuracy of responses when backed by a Knowledge Graph, in every tested category.

Specifically, top-line findings include:

A Knowledge Graph improved LLM response accuracy by 3x across 43 business questions.

LLMs – without the support of a Knowledge Graph – fail to accurately answer “schema-intensive” questions (questions often focused on metrics & KPIs and strategic planning). LLMs returned accurate responses 0% of the time.

A Knowledge Graph significantly improves the accuracy of LLM responses – even schema-intensive questions.

A comparison: Answering complex business questions

The benchmark uses the enterprise SQL schema from the OMG Property and Casualty Data Model in the insurance domain. The OMG specification addresses the data management needs of the Property and Casualty (P&C) insurance community. Researchers measured accuracy with the metric of Execution Accuracy (EA) from the Yale Spider benchmark.

Against this metric, the benchmark compared the accuracy of responses to 43 questions of varying complexity, ranging from simple operational reporting to key performance indicators (KPIs).

The benchmark applies two complexity vectors: question complexity and schema complexity.

Question complexity: Refers to the amount of aggregations, mathematical functions, and the number of table joins required to produce a response.

Schema complexity: Refers to the amount of different data tables that must be queried in order to produce a response.

These vectors create four categories of question complexity related to typical analysis that occurs in an enterprise environment:

Day-to-day analytics: These are questions of low-question and low-schema complexity. Answering these questions requires querying three columns and one table of data.

Example: "Return all the claims we have by claim number, open date, and close date."

Operational analytics: These are questions of high-question and low-schema complexity. Answering these questions requires aggregation and querying four tables of data.

Example: "What is the average time to settle a claim policy?"

Metrics & KPIs: These are questions of low-question and high-schema complexity. Answering these questions requires querying three columns and six tables of data.

Example: "What are the loss payment, loss reserve, expense reserve amount by claim number?"

Strategic planning: These are questions of high-question and high-schema complexity. Answering these questions means using aggregation, math, and querying nine tables of data.

Example: "What is the total loss of each policy where loss is the sum of loss payment, loss revenue, expense payment, and expense reserve amount?”

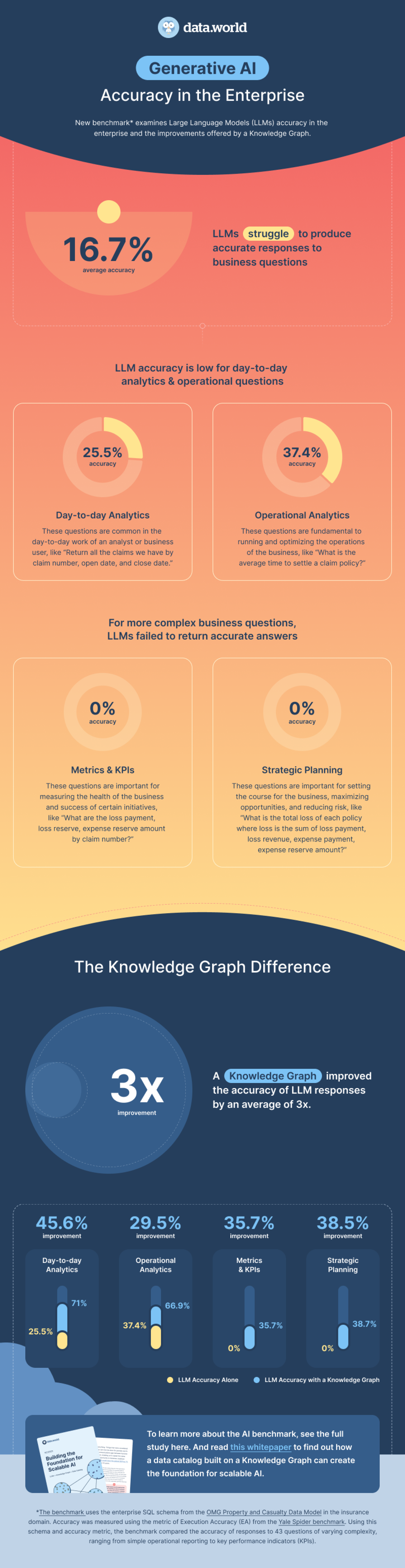

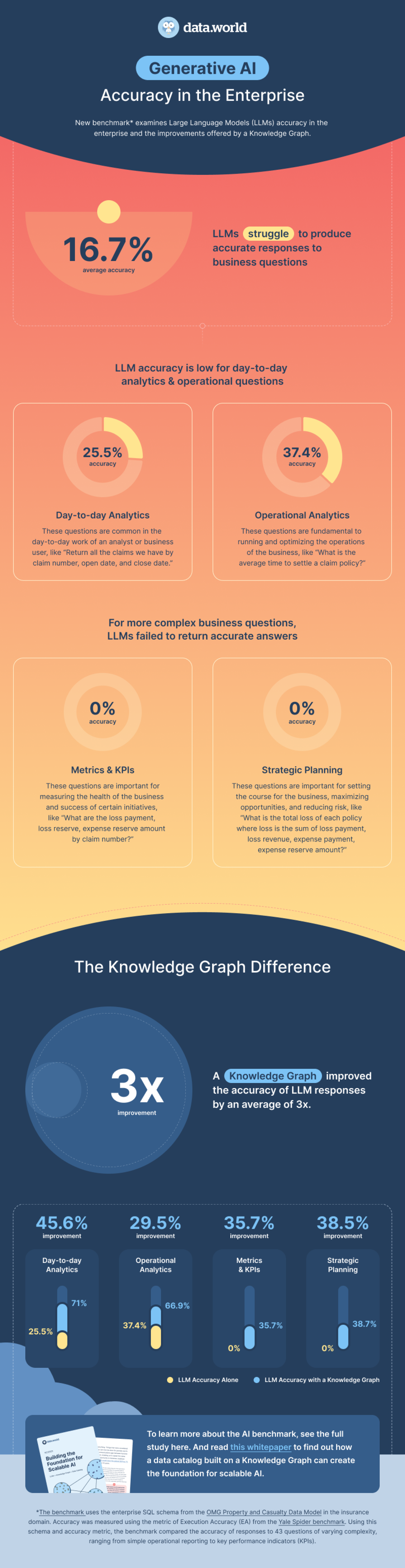

LLMs struggle to answer business questions

When scored on 43 different business questions across the four categories of complexity, the LLM struggled to produce accurate answers. The average accuracy of the answer across all questions came in at 16.7%.

Overall accuracy was poor, but the LLM struggled most significantly with high-schema complexity questions. For those categories with high-schema complexity – questions related to metrics, KPIs and strategic planning, the LLM failed to return an accurate answer, scoring 0% in both categories.

Questions with high-schema complexity represent the upper end of questions that analysts and executives might ask of their data. Typically, these questions require an expert analyst to answer – someone proficient in SQL with a deep understanding of the organization’s data.

The average score for each category of complexity can be found below:

Day-to-day analytics: 25.5% accuracy

Operational analytics: 37.4% accuracy

Metrics & KPIs: 0% accuracy

Strategic planning: 0% accuracy

The Knowledge Graph difference

Knowledge Graphs map data to meaning, capturing both semantics and context. Rigid relational data moves into a flexible graph structure, enabling a richer understanding of the connections between data, people, processes, and decisions. The flexible format provides the context that LLMs need to more accurately answer complex questions across both aforementioned vectors of question and schema complexity.

The benchmark showed an average 3x improvement in response accuracy and marked improvement in each category – even the high-schema complexity questions that stumped the LLM alone.

The improvement added by the Knowledge Graph can be seen here:

Day-to-day analytics: From 25.5% accuracy → 71% accuracy with Knowledge Graph

Operational analytics: From 37.4% accuracy → 66.9% accuracy with Knowledge Graph an improvement of 2X

Metrics & KPIs: From 0% accuracy → 35.7% accuracy with Knowledge Graph

Strategic planning: From 0% accuracy → 38.7% accuracy with Knowledge Graph

The future of AI-ready data

Knowledge Graphs remove a critical barrier standing in the way of enterprises unlocking new capabilities with AI. This benchmark underscores the significant impact a Knowledge Graph can have on LLM accuracy in enterprise settings.

The implications are enormous for businesses: Making LLMs a viable means for making data-driven decision-making accessible to more people (regardless of technical know-how), enabling faster time-to-value with data and analytics, and surfacing new ways to use data to drive ROI, just to name a few.

This is the first benchmark investigating how Knowledge Graph-based approaches can strengthen LLM accuracy and impact in the enterprise. But these are still early days. LLMs will continue to become more accurate and Knowledge Graph-techniques will continue to refine LLM response accuracy. The authors plan to publish additional benchmarks documenting the effects of these improvements in the future.

Even as LLMs and Knowledge Graph techniques improve, no system is correct 100% of the time. The ability to audit responses and trace the path of LLM response generation will be critical to accountability and trust. By leveraging a data catalog built on a Knowledge Graph, like the data.world Data Catalog Platform, enterprises can bring explainability to LLMs, effectively opening up the AI “black box” and enabling the LLMs to “show their work.”

Additionally, a data catalog built on a Knowledge Graph will enable enterprises to govern the data, metadata, queries, and responses being used and generated by the LLM. Proper governance is critical for ensuring that sensitive or proprietary data is protected and that the responses are valid in the context of the organization and its internal rules.

With a well-managed data catalog built on Knowledge Graph, enterprises can create AI-ready data and build the foundation for success with generative AI – improving accuracy, enabling explainability, and applying governance.

Learn how your organization can build AI-powered applications that generate accurate, explainable, and governed responses.

To learn more about the AI benchmark, see the full study here.

A new benchmark, published by the authors, demonstrates further enhancements to response accuracy when using knowledge graphs with LLMs. Click here to learn more about the new benchmark.

Large Language Models (LLMs) present enterprises with exciting new opportunities for leveraging their data, from improving processes to creating entirely new products and services. But coupled with excitement for the transformative power of LLMs, there are also looming concerns. Chief among those concerns is the accuracy of LLMs in production. Initial evaluations have found that LLMs will surface false information backed by fabricated citations as fact – also known as “hallucinations.” This phenomenon led McKinsey to cite “inaccuracy” as the top risk associated with generative AI.

Download this first-of-its-kind LLM benchmark report to discover how a Knowledge Graph can improve LLM accuracy by 300% and give your enterprise a competitive edge.

Some context around hallucinations: LLMs function as statistical pattern-matching systems. They analyze vast quantities of data to generate responses based on statistical likelihood – not fact. Therefore, the smaller the dataset – say your organization’s internal data rather than the open internet – the less likely it is that the responses are accurate.

However, research is underway to address this challenge. A growing number of experts from across the industry, including academia, database companies, and industry analyst firms, like Gartner, point to Knowledge Graphs as a means for improving LLM response accuracy.

To evaluate this claim, a new benchmark from Juan Sequeda Ph.D., Dean Allemang Ph.D., and Bryon Jacob, CTO of data.world, examines the positive effects that a Knowledge Graph can have on LLM response accuracy in the enterprise. They compared LLM-generated answers to answers backed by a Knowledge Graph, via data stored in a SQL database. The benchmark found evidence of a significant improvement in the accuracy of responses when backed by a Knowledge Graph, in every tested category.

Specifically, top-line findings include:

A Knowledge Graph improved LLM response accuracy by 3x across 43 business questions.

LLMs – without the support of a Knowledge Graph – fail to accurately answer “schema-intensive” questions (questions often focused on metrics & KPIs and strategic planning). LLMs returned accurate responses 0% of the time.

A Knowledge Graph significantly improves the accuracy of LLM responses – even schema-intensive questions.

A comparison: Answering complex business questions

The benchmark uses the enterprise SQL schema from the OMG Property and Casualty Data Model in the insurance domain. The OMG specification addresses the data management needs of the Property and Casualty (P&C) insurance community. Researchers measured accuracy with the metric of Execution Accuracy (EA) from the Yale Spider benchmark.

Against this metric, the benchmark compared the accuracy of responses to 43 questions of varying complexity, ranging from simple operational reporting to key performance indicators (KPIs).

The benchmark applies two complexity vectors: question complexity and schema complexity.

Question complexity: Refers to the amount of aggregations, mathematical functions, and the number of table joins required to produce a response.

Schema complexity: Refers to the amount of different data tables that must be queried in order to produce a response.

These vectors create four categories of question complexity related to typical analysis that occurs in an enterprise environment:

Day-to-day analytics: These are questions of low-question and low-schema complexity. Answering these questions requires querying three columns and one table of data.

Example: "Return all the claims we have by claim number, open date, and close date."

Operational analytics: These are questions of high-question and low-schema complexity. Answering these questions requires aggregation and querying four tables of data.

Example: "What is the average time to settle a claim policy?"

Metrics & KPIs: These are questions of low-question and high-schema complexity. Answering these questions requires querying three columns and six tables of data.

Example: "What are the loss payment, loss reserve, expense reserve amount by claim number?"

Strategic planning: These are questions of high-question and high-schema complexity. Answering these questions means using aggregation, math, and querying nine tables of data.

Example: "What is the total loss of each policy where loss is the sum of loss payment, loss revenue, expense payment, and expense reserve amount?”

LLMs struggle to answer business questions

When scored on 43 different business questions across the four categories of complexity, the LLM struggled to produce accurate answers. The average accuracy of the answer across all questions came in at 16.7%.

Overall accuracy was poor, but the LLM struggled most significantly with high-schema complexity questions. For those categories with high-schema complexity – questions related to metrics, KPIs and strategic planning, the LLM failed to return an accurate answer, scoring 0% in both categories.

Questions with high-schema complexity represent the upper end of questions that analysts and executives might ask of their data. Typically, these questions require an expert analyst to answer – someone proficient in SQL with a deep understanding of the organization’s data.

The average score for each category of complexity can be found below:

Day-to-day analytics: 25.5% accuracy

Operational analytics: 37.4% accuracy

Metrics & KPIs: 0% accuracy

Strategic planning: 0% accuracy

The Knowledge Graph difference

Knowledge Graphs map data to meaning, capturing both semantics and context. Rigid relational data moves into a flexible graph structure, enabling a richer understanding of the connections between data, people, processes, and decisions. The flexible format provides the context that LLMs need to more accurately answer complex questions across both aforementioned vectors of question and schema complexity.

The benchmark showed an average 3x improvement in response accuracy and marked improvement in each category – even the high-schema complexity questions that stumped the LLM alone.

The improvement added by the Knowledge Graph can be seen here:

Day-to-day analytics: From 25.5% accuracy → 71% accuracy with Knowledge Graph

Operational analytics: From 37.4% accuracy → 66.9% accuracy with Knowledge Graph an improvement of 2X

Metrics & KPIs: From 0% accuracy → 35.7% accuracy with Knowledge Graph

Strategic planning: From 0% accuracy → 38.7% accuracy with Knowledge Graph

The future of AI-ready data

Knowledge Graphs remove a critical barrier standing in the way of enterprises unlocking new capabilities with AI. This benchmark underscores the significant impact a Knowledge Graph can have on LLM accuracy in enterprise settings.

The implications are enormous for businesses: Making LLMs a viable means for making data-driven decision-making accessible to more people (regardless of technical know-how), enabling faster time-to-value with data and analytics, and surfacing new ways to use data to drive ROI, just to name a few.

This is the first benchmark investigating how Knowledge Graph-based approaches can strengthen LLM accuracy and impact in the enterprise. But these are still early days. LLMs will continue to become more accurate and Knowledge Graph-techniques will continue to refine LLM response accuracy. The authors plan to publish additional benchmarks documenting the effects of these improvements in the future.

Even as LLMs and Knowledge Graph techniques improve, no system is correct 100% of the time. The ability to audit responses and trace the path of LLM response generation will be critical to accountability and trust. By leveraging a data catalog built on a Knowledge Graph, like the data.world Data Catalog Platform, enterprises can bring explainability to LLMs, effectively opening up the AI “black box” and enabling the LLMs to “show their work.”

Additionally, a data catalog built on a Knowledge Graph will enable enterprises to govern the data, metadata, queries, and responses being used and generated by the LLM. Proper governance is critical for ensuring that sensitive or proprietary data is protected and that the responses are valid in the context of the organization and its internal rules.

With a well-managed data catalog built on Knowledge Graph, enterprises can create AI-ready data and build the foundation for success with generative AI – improving accuracy, enabling explainability, and applying governance.

Learn how your organization can build AI-powered applications that generate accurate, explainable, and governed responses.

To learn more about the AI benchmark, see the full study here.

Get the best practices, insights, upcoming events & learn about data.world products.