Mar 02, 2023

Brett Hurt

CEO & Co-Founder

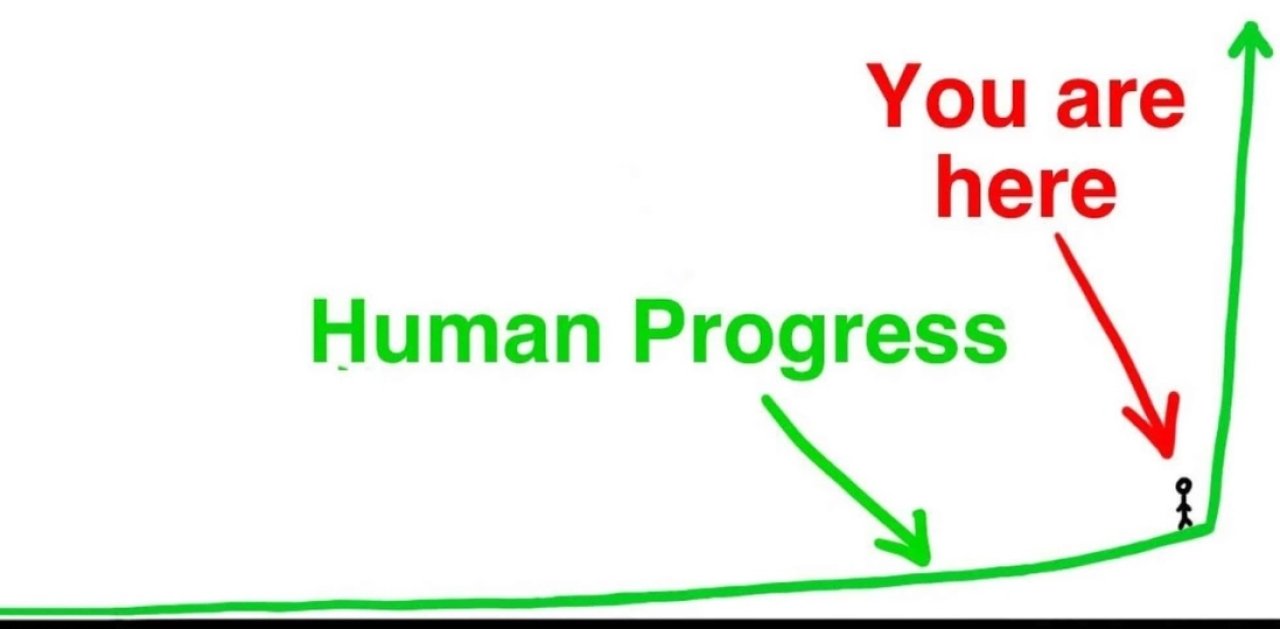

Sometimes, technology evolves very quickly, as I discussed in my last essay on the past half century’s three “surges” of data. But sometimes, tech straps on the afterburners — as it is surely doing today.

Welcome to data’s “Fourth Surge,” which in January I foreshadowed as the focus of this post. In fact, since I concluded that essay with a promise to explain the Fourth Surge, that surge has turned into a torrent.

It’s hardly news that OpenAI’s ChatGPT is the churning white water foam at the head of the torrent, thrusting Large Language Models (LLMs), sometimes known as “generative AI”, into the mainstream. But so much has come behind, carried in the tow of its crest. No doubt, there’s plenty of flotsam and jetsam borne along in this deluge of news, information, and hype. None other than OpenAI’s CEO himself, Sam Altman, has advised that we must move slowly and deliberately, and take some of the claims made on its behalf with large grains of salt. The scramble to make ChatGPT pass the so-called Turing Test proposed in 1950 by famed computer scientist Alan Turing – whether a machine can have a conversation with a human without the human knowing it’s a machine – has led to questionable exercises in digital karaoke.

“The growth of misinformation is a risk,” wrote technology futurist Azeem Azhar. “But perhaps more pernicious is simply that uncontrolled AI-generated content will flood our attention circuitry, weakening our ability to critically assess what we read.”

Another sage on the subject is Irish data scientist Daragh O Brien, who colorfully warns of the Ensh*ttening of Knowledge, which is a read everyone in our beloved data space should ponder!

But the head-turning strides are undeniable. We announced our new AI Lab last week, on February 22, a first for our industry. Our AI Lab is led by Juan Sequeda, principal scientist at data.world and co-author of the book Designing and Building Enterprise Knowledge Graphs. This is our own “afterburner” to further the evolution toward the full promise of data in enterprises – to become a brain trust, a collaborative network of cognition across and between teams, partners, and customers.

It’s an incredibly exciting time in this arena with our customers. We’re already deep in collaboration with WPP, the well-known multinational advertising conglomerate, to use OpenAI’s GPT-3 with the data.world Data Catalog to make datasets far more discoverable, recommend use cases for datasets, and automatically enrich data with time-saving suggestions for descriptive metadata.

Elsewhere, we are watching the giants fight each other, much like humanity did in the epic movies Pacific Rim and Clash of the Titans. OpenAI and their partner Microsoft have begun unveiling the next iteration, named GPT-4. At the center of this richly baked cake of technology is the rearchitected-from-the-crypt search engine Bing, long given up for lost. A few weeks ago, Bing closed out 2022 with just 3.19% of global search engine market share. Goliath Google had 91.88%. Given that, this marriage between OpenAI and Microsoft, with Microsoft’s initial $1 billion investment followed by its most recent $10 billion oversize investment, is so profound that it invites comparison to such inflection points as the invention of the internet itself. This is big and I’ve more on this below.

But first recall that OpenAI’s ChatGPT, which has set a record as the fastest app to reach 100 million active users, is a proof point. $10 billion is a lot of money. However, one way to think about this is with Microsoft CEO Satya Nadella’s stunning calculation. He explained that for every percent of search engine market share they peel away, Microsoft nets another $2 billion! Not to mention the fact that they’ll be integrating OpenAI’s GPT-4 and its future versions into all of the Microsoft Office products, leading to even more potential for Microsoft globally as it continues its battle with Google Workspace. To be sure, this is a comparison to the early internet, still cumbersome, and lacking an interface architecture akin to the World Wide Web. But epoch-making nonetheless.

AI is a very rich vein. What Google announced with DeepMind’s AlphaFold, the protein-folding breakthrough in 2022, was extremely impressive for bioscientists – and should be for all humanity if you think about its far-reaching implications.

Google has reacted to OpenAI with extreme urgency, announcing its own AI project called Bard, which has so far failed to impress – shades of Clayton Christensen’s famous insight on the “Innovator’s Dilemma.” Longstanding paradigms – led by the $695 billion digital advertising sector essentially created by Google – are about to shift as we’ve never seen. If you’re a practitioner in the $75 billion a year search engine optimization (SEO) market… well, change is nigh.

However rough around the edges, we are surely at a dramatic inflection point.

This year, I will be writing much more on this technology, its promises, and its perils. I share OpenAI CEO Altman’s caution and call for patience. I also agree with the way he put it recently on the Hard Fork podcast: “... I believe this is going to change many – maybe the great majority of – aspects of society…”

Change wrought by AI is coming in spades. Today, however, I want to focus on the backstory. Let’s peer around the corner – not just at OpenAI, but also at this enabling Fourth Surge of data, driven by what I describe as the “Double Helix of Data” — the combinatorial power of Data Discovery and Agile Data Governance that is powered by a knowledge graph.

You can’t understand the 15th century Age of Exploration without understanding the invention of the magnetic compass. You can’t understand the advent of the Industrial Revolution without grasping the role of ball bearings. And you certainly can’t fathom the impact of today’s computer-centric society without understanding how Jack Kilby at Texas Instruments and Robert Noyce at Fairchild Semiconductor independently invented the integrated circuit in 1958 and 1959.

You can’t understand the Fourth Surge without understanding the knowledge graph.

To steal a phrase from an industry colleague, the data scientist Seth Earley, the knowledge graph is the scaffolding for building knowledge from data, datasets, schemas, and data streams. The knowledge graph is the definition of “is-ness”. This original phrase captures so much. For the knowledge graph is what enables organizations and teams to look at their diverse data assets, ask the question, “What is this?”, and get the answer. The answer is that these are all your data assets, related to one another, and that they can therefore be choreographed together, enabling the real meaning of becoming “data-driven.”

The knowledge graph is driving the Fourth Surge as a breakthrough enabling technology for both AI and the “Double Helix of Data”. This Fourth Surge driving the torrent of change with its AI afterburners is in many ways analogous to the genetic revolution, hence my term, the “Double Helix of Data”. The original DNA revolution is really a story of two breakthroughs – first the discovery, then the afterburner.

The “discovery” came in 1953, when the code of biology’s DNA double helix was cracked by scientists Rosalind Franklin, James Watson, and Francis Crick. The “afterburner” came two decades later, with the 1973 transfer of DNA from one bacterium into another by biochemists Herbert Boyer and Stanley Cohen – what we today call genetic engineering.

In parallel to this work and discovery of DNA, great strides were made in medicine and biology. We created a vaccine for polio. Cardiologists found ways to block blood flows, enabling implantation of a new device called the pacemaker – just the beginning of our merger with machines.

The 1950s were also when we developed nuclear magnetic resonance, the forerunner of the MRIs now routine in every hospital. Biologists made new discoveries in the way proteins and amino acids work, and in the 1960s science revealed much in the anatomy of the brain, including the relationship between the frontal cortex and the limbic system.

I mention these strides in biology and the physical sciences not to burden you with a history of abstract but applied science. Rather, I mention this amazing history because its more familiar arc illustrates the parallel evolution in the digital world, and the comparably profound strides in the management and use of data. Of course, the tools are many, including cloud technologies and machine learning, even blockchain. But the cognition at the center of it all is and will be enabled by data catalogs and knowledge graphs – the enabling technologies, for example, of Facebook, Google, Amazon, and other titans of Big Tech.

Just as the discovery of DNA’s double helix, and subsequently the means to engineer it, revolutionized the life sciences, the Double Helix of Data is of similar scope. That historic breadth comes from the pairing of two innovations: Data Discovery and Agile Data Governance.

The faster we master and democratize these tools, the faster we’ll master this torrent upon us.

LinkedIn Co-founder and VC extraordinaire Reid Hoffman captured it recently: “we are ‘Homo techne’” – humans as toolmakers and tool users. “The story of humanity is the story of technology,” Hoffman wrote. That story is about to open upon its biggest and most dramatic chapter yet.

In this age of Hoffman’s “Homo techne”, our tools include the data catalog and the knowledge graph, which can be compared to the nervous system and brains of the organism that is enterprise data. I don’t make this analogy lightly. For just as a genetic mutation among Homo sapiens some 50,000 years ago accelerated the development of our nervous system and brains to enable language, grammar, and, in turn, true cognition, these recent digital tools are what convert the growing but disparate silos of data that underlie our age into digital cognition.

But we haven’t stopped there. Our data catalog powered by the knowledge graph is not just the toolset of digital cognition and grammar. No, these are tools specifically for universal grammar. There are other data tools in the marketplace, but most are also used to lock down your data itself, to thwart compatibility with other applications. As my colleague and data.world co-founder and CTO Bryon Jacob wrote recently, “vendor lock-in” is a scourge on our industry – the equivalent, by an order of magnitude, of blocking your ability to share your iPhone files on a PC or to sync your iPad with a Garmin smartwatch.

Not here at data.world.

It hardly needs to be said that in recent decades, we’ve become very good at creating data. As former Google CEO Eric Schmidt famously calculated back in 2010, all the information we created from the dawn of civilization until 2003 added up to something like five exabytes of data – the equivalent of roughly 2.8 billion hours of high-definition video streamed at 4 Mbps. Now, we create that much data every two days, or less. But bigger data haystacks, as wonderful as they might be, don’t make it easier to find the informational needle.

Enter Data Discovery, the suite of tools we have integrated with our data catalog and driven with our knowledge graph. It is all about enabling the aspiring masters of data to precisely identify, target, combine, and make usable their growing oceans of valuable, but siloed, data. This is how enterprises move beyond the phrase “data-driven” to actually become so, and to know and broadly see the full spectrum of the data assets they already have.

But revealing it is only half the challenge, as was the case with that equivalent discovery in 1953 of biology’s double helix. As mentioned, that discovery allowed us to identify inheritable diseases and predict them. It illuminated our origins and ancestors, and it gave us a new understanding of how genetic mutations occurred. And it certainly helped us challenge racial prejudices with science – we are all identical in our makeup regardless of pigmentation.

Our genetic uniformity is a fascinating subject in its own right – the least diversity among virtually all mammals – and is explained by researchers as a result of the Homo sapiens population falling at some point to a breeding population of fewer than 10,000. Ponder for a moment the idea that all 7.9 billion of us are descended from a group of folks who could barely fill a medium-sized university.

This is all extraordinary illumination, but the transformative achievement for humanity was when the discovery of DNA was completed in 1973 with the understanding of how to move genes around – the making of the agile double helix. All manner of life-saving and life-enhancing innovations have ensued since. We use genetically modified E. coli to create synthetic insulin for diabetics, and genetically modified baker’s yeast to produce hepatitis B vaccine. We use genetically modified mammalian cells to make blood factor VIII for hemophiliacs and the tissue plasminogen activator – known as tPA – for heart attack victims. Since 2013, scientists have been using the geneticist’s “scissors,” the gene-editing technique CRISPR, to improve cancer treatment, and it may well lead to a cure. The mRNA vaccines that have short-circuited the COVID-19 pandemic are also borne along by this research.

The scope and scale of this profound leap in our scientific standing is my analogy to the quantum leap that is yielded by Agile Data Governance when combined with Data Discovery – together forming the “Double Helix of Data.”

Data discovery is the suite of technologies that allows precise locating, mapping, and correlation of data assets. Before the combination of the data catalog powered by a knowledge graph allowed us to do this, data resources were often like the internet before the invention of the first search engine. And we’ve refined these techniques with supporting tools, such as our Eureka Explorer, that leverage the power of the knowledge graph to map the entire life cycle of data – data lineage as we call it. This allows us to see where data came from, where and how it was used, and its relationship to the relevant metadata, without which it is often lost.
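To make the idea of lineage a little more concrete, here is a minimal sketch in Python. It is an illustration only, not how Eureka Explorer or the data.world catalog is actually built: the `LineageGraph` class and the asset names are hypothetical, and a real knowledge graph would carry far richer metadata on every node and edge. The sketch records which asset was derived from which, then walks those links in either direction to answer "where did this come from?" and "where is this used?"

```python
from collections import defaultdict


class LineageGraph:
    """Toy lineage graph (hypothetical): an edge runs from a source asset
    to an asset that was derived from it."""

    def __init__(self):
        self.derived_from = defaultdict(set)  # asset -> direct upstream sources
        self.feeds = defaultdict(set)         # asset -> direct downstream consumers

    def add_edge(self, source, target):
        """Record that `target` was built from `source`."""
        self.derived_from[target].add(source)
        self.feeds[source].add(target)

    @staticmethod
    def _walk(start, edges):
        """Collect every asset reachable from `start` by following `edges`."""
        seen, stack = set(), [start]
        while stack:
            node = stack.pop()
            for neighbor in edges.get(node, ()):
                if neighbor not in seen:
                    seen.add(neighbor)
                    stack.append(neighbor)
        return seen

    def upstream(self, asset):
        """Everything `asset` ultimately came from."""
        return self._walk(asset, self.derived_from)

    def downstream(self, asset):
        """Everything that ultimately depends on `asset`."""
        return self._walk(asset, self.feeds)


# Hypothetical assets, for illustration only.
g = LineageGraph()
g.add_edge("crm_export", "customers_clean")
g.add_edge("web_logs", "customers_clean")
g.add_edge("customers_clean", "churn_dashboard")

print(sorted(g.upstream("churn_dashboard")))
print(sorted(g.downstream("crm_export")))
```

Asking for the upstream of the dashboard surfaces both raw sources behind it, while asking for the downstream of the CRM export shows everything that would be affected if that export changed.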

Agile Data Governance, meanwhile, is the inversion of rigid hierarchies in data management, creating “trust at scale,” the building of communities around data. Akin to the “scissors” of CRISPR, it is a revolution within a revolution. As I discussed in my earlier essay, this concept, developed by Jon Loyens, my co-founder and our Chief Product Officer, is inspired by the Agile Software Movement of two decades ago. It’s hard to exaggerate the ways the bottom-up ideas profoundly remade the top-down software landscape.

In this account of the transformative power of these technologies, author, data pioneer, and data.world Advisory Board member Theresa Kushner quotes a company auditor on how the approaches of the Agile Software Movement came to be used in data governance: “They are helping us make sense of complex and large datasets and identifying new opportunities to help us improve our decision making. Last month when we thought we had a data breach and we didn’t know where to look, we went to the data analyst community, and it only took 15 minutes to pinpoint the problem and resolve it.”

Header Image: By Natali_Mis

Agile data governance is all about access and empowerment, but also the right level of protection for one of an organization’s most valuable assets: its data. Getting this balance right is challenging but well worth the endeavor. All companies want their team members to have access to the collective braintrust that enables them to get their jobs done much more efficiently and effectively. No company wants their data assets stolen or squandered.

“We are as gods and might as well get good at it,” wrote polymath Stewart Brand in the introduction of the Whole Earth Catalog, upon launch more than half a century ago. I think of this quote often. For his point in 1968 is just as valid in 2023. We are not passengers on Planet Earth. Rather, we are the pilots.

The Fourth Surge is our “star dust”, the ingredients of our fast expanding digital cosmos. Our essential navigation tool – our astrolabe, compass, and GPS – is the Double Helix of Data.

At data.world, we are completely focused on this evolution and believe that knowledge graphs will redefine the entire category of data catalogs over the next few years. AI applications, famous and otherwise, are what we’re seeing debated right now. But we should also be looking behind the curtain and understanding the ultimate afterburners behind this technology – what we call knowledge graphs and the Double Helix of Data: Data Discovery and Agile Data Governance.

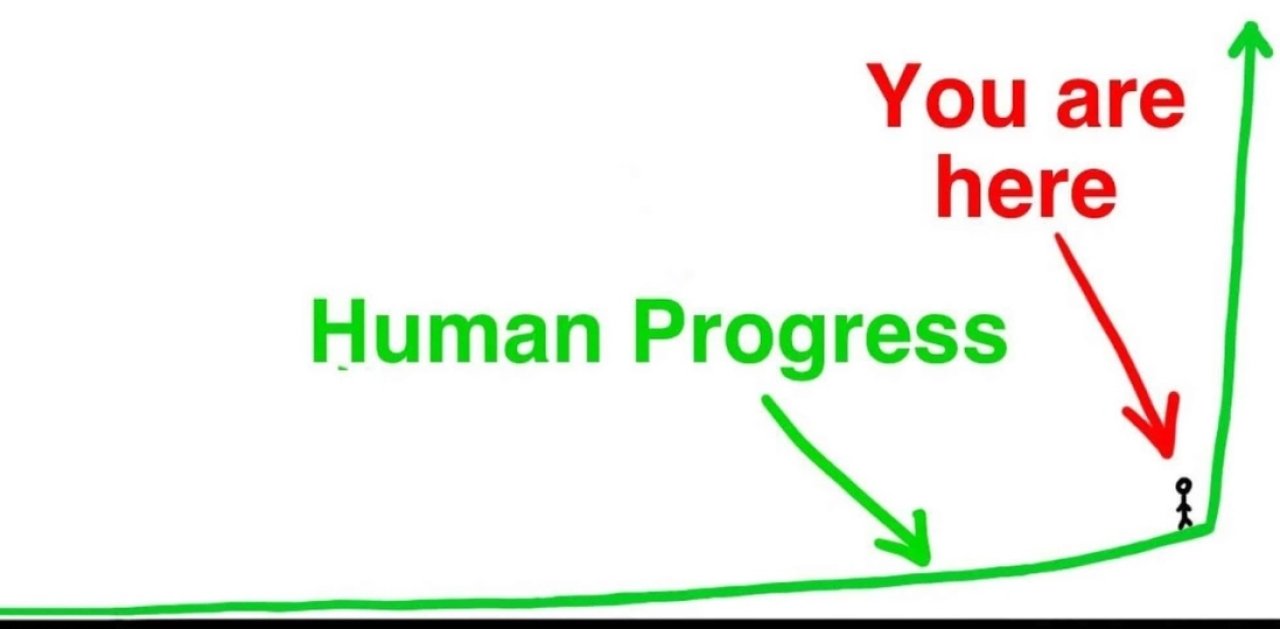
