Feb 14, 2018

Shad Reynolds

VP, Engineering

Working with new data can be overwhelming. How do you extract information and knowledge from the facts sitting in front of you? In this article, we’ll explore a new dataset. We’ll ask, then answer, a number of common types of questions. Exercises and the full dataset are available for those who want to dig deeper.

Cast yourself back to a simpler time… when the web was new and filled with possibilities: August 1995. Personally, I was just settling into my first weeks of university, where, for the first time, I had a direct connection to the internet. After years of 10¢/min long-distance dial-up America Online, I was finally free to surf the World Wide Web without restriction. Oh, what a time to be alive.

One of the sites I distinctly recall visiting in those first weeks was NASA.gov. NASA was one of a handful of agencies that embraced the web in those days. Coincidentally, I recently came across something interesting… a dataset of server logs… from August of ’95!

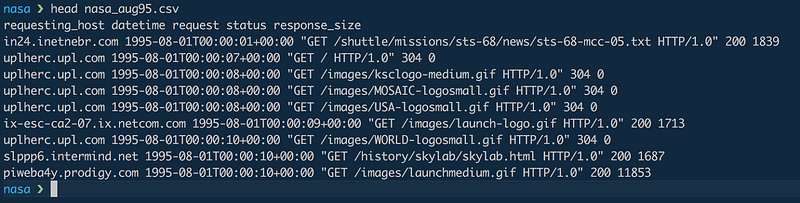

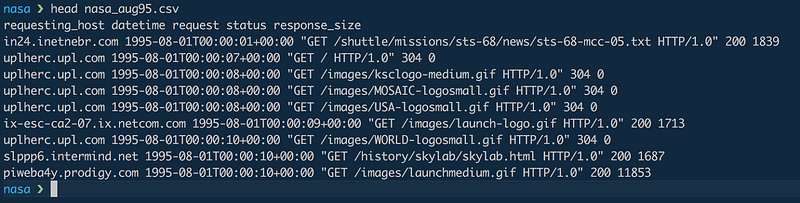

NASA.gov — server logs for August 1995

Each log entry records the `requesting_host`, `datetime`, `request`, `status`, and `size`. I’ve uploaded this file to data.world/shad/nasa-website-data for further analysis with SQL.

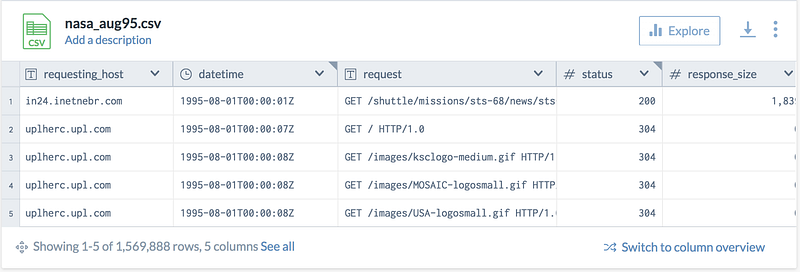

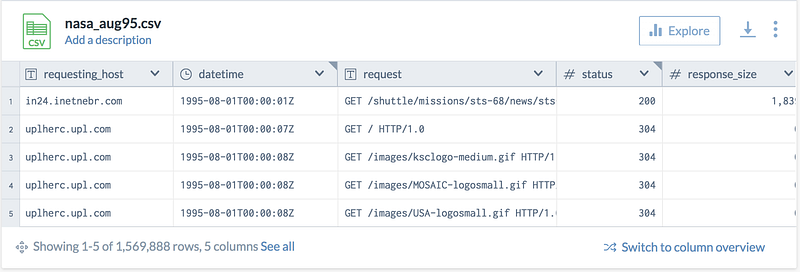

File preview for NASA server logs—Explore file

Now, let’s consider what sorts of questions we might have asked of this data had we worked with NASA more than 20 years ago.

When did most visitors hit the site? I was pretty active in the 10pm–2am timeframe, but perhaps this wasn’t typical. Let’s see…

Requests per hour — View query

This query uses `DATE_PART()` to pull the hour out of each request’s timestamp, and `GROUP BY` to count the requests in each hour. It looks like midday is the highest-traffic time of day, with significantly less traffic during the night. Not entirely surprising, I suppose, but interesting nonetheless.
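The original query is linked rather than reproduced, so here is a minimal sketch of the same idea under a few assumptions: it uses Python’s built-in `sqlite3` module, a hypothetical table named `logs` with the dataset’s five columns, and a handful of made-up rows. SQLite has no `DATE_PART()`, so `strftime('%H', ...)` stands in for extracting the hour.

```python
import sqlite3

# Made-up rows in the dataset's column order:
# (requesting_host, datetime, request, status, size). Not real log data.
ROWS = [
    ("piweba3y.prodigy.com", "1995-08-01 13:05:00", "GET /ksc.html HTTP/1.0", 200, 7280),
    ("piweba5y.prodigy.com", "1995-08-01 13:40:12", "GET / HTTP/1.0", 200, 7074),
    ("host1.example.edu", "1995-08-02 13:02:45", "GET /ksc.html HTTP/1.0", 200, 7280),
    ("host2.example.edu", "1995-08-02 23:59:59", "GET /ksc.html HTTP/1.0", 200, 7280),
]


def load(rows):
    """Create an in-memory table (hypothetically named `logs`) and fill it."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE logs (requesting_host TEXT, datetime TEXT,"
        " request TEXT, status INTEGER, size INTEGER)"
    )
    conn.executemany("INSERT INTO logs VALUES (?, ?, ?, ?, ?)", rows)
    return conn


# strftime('%H', ...) plays the role of DATE_PART() with "hour";
# GROUP BY then collapses all requests in the same hour into one count.
REQUESTS_PER_HOUR = """
    SELECT CAST(strftime('%H', datetime) AS INTEGER) AS hour,
           COUNT(*) AS requests
    FROM logs
    GROUP BY hour
    ORDER BY hour
"""

conn = load(ROWS)
for hour, requests in conn.execute(REQUESTS_PER_HOUR):
    print(f"{hour:02d}:00  {requests} requests")
```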

This is nearly the same question as before, applied to a different part of the date: the day of the week. We can simply adjust our query from before, changing the `DATE_PART()` argument from “hour” to “dayofweek”.

Requests per day of week — View Query

Now we have all of our traffic aggregated by the day of the week on which the request was made. Day 4 (Thursday) is the largest day for traffic, followed closely by day 2 (Tuesday). Weekends were significantly slower.
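Under the same assumptions (Python’s `sqlite3`, a hypothetical `logs` table, made-up rows), swapping the hour for the day of the week is a one-token change. SQLite’s `strftime('%w', ...)` returns 0 for Sunday through 6 for Saturday, which lines up with the numbering above where day 4 is Thursday.

```python
import sqlite3

DAY_NAMES = ["Sunday", "Monday", "Tuesday", "Wednesday",
             "Thursday", "Friday", "Saturday"]

# Made-up rows (requesting_host, datetime, request, status, size).
ROWS = [
    ("host1.example.edu", "1995-08-01 10:00:00", "GET / HTTP/1.0", 200, 7074),          # Tuesday
    ("host2.example.edu", "1995-08-03 11:30:00", "GET / HTTP/1.0", 200, 7074),          # Thursday
    ("host3.example.edu", "1995-08-10 09:15:00", "GET /ksc.html HTTP/1.0", 200, 7280),  # Thursday
    ("host4.example.edu", "1995-08-06 22:45:00", "GET / HTTP/1.0", 200, 7074),          # Sunday
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE logs (requesting_host TEXT, datetime TEXT,"
    " request TEXT, status INTEGER, size INTEGER)"
)
conn.executemany("INSERT INTO logs VALUES (?, ?, ?, ?, ?)", ROWS)

# Same shape as the hour query; only the strftime() format changes ('%H' -> '%w').
REQUESTS_PER_WEEKDAY = """
    SELECT CAST(strftime('%w', datetime) AS INTEGER) AS day_of_week,
           COUNT(*) AS requests
    FROM logs
    GROUP BY day_of_week
    ORDER BY day_of_week
"""

for day, requests in conn.execute(REQUESTS_PER_WEEKDAY):
    print(f"{day} ({DAY_NAMES[day]}): {requests}")
```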

Now, let’s think about traffic a bit more generally. Perhaps there was a specific Thursday that was popular for some reason. Let’s dig a bit deeper and look at each week to see how traffic compares.

In this case, we want to see how weeks compare to one another. We could simply sum up the total count of requests, but we have partial weeks in our data, so we can’t directly compare totals. What we actually need is the average number of requests per day for each week of our data (including partial weeks). This allows us to compare weeks and see how our traffic changes over time.
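The two-step approach described here (count requests per day, then average those daily counts within each week) can be sketched with a common table expression. This is a minimal sketch assuming Python’s `sqlite3`, a hypothetical `logs` table, made-up rows, and weeks that start on Sunday; the original query’s definition of `start_of_week` may differ.

```python
import sqlite3

# Made-up rows (requesting_host, datetime, request, status, size).
# 1995-08-01 is a Tuesday and 1995-08-06 is a Sunday.
ROWS = [
    ("host1.example.edu", "1995-08-01 09:00:00", "GET / HTTP/1.0", 200, 7074),
    ("host2.example.edu", "1995-08-01 14:00:00", "GET / HTTP/1.0", 200, 7074),
    ("host3.example.edu", "1995-08-03 10:00:00", "GET / HTTP/1.0", 200, 7074),
    ("host1.example.edu", "1995-08-06 08:00:00", "GET / HTTP/1.0", 200, 7074),
    ("host2.example.edu", "1995-08-06 12:00:00", "GET / HTTP/1.0", 200, 7074),
    ("host3.example.edu", "1995-08-06 20:00:00", "GET / HTTP/1.0", 200, 7074),
    ("host4.example.edu", "1995-08-07 11:00:00", "GET / HTTP/1.0", 200, 7074),
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE logs (requesting_host TEXT, datetime TEXT,"
    " request TEXT, status INTEGER, size INTEGER)"
)
conn.executemany("INSERT INTO logs VALUES (?, ?, ?, ?, ?)", ROWS)

WEEKLY_AVERAGE = """
    WITH days AS (
        -- Step 1: one row per calendar day that has any traffic.
        SELECT date(datetime) AS day, COUNT(*) AS requests
        FROM logs
        GROUP BY day
    )
    -- Step 2: roll each day back to the Sunday that starts its week
    -- (assumed week start), then average the daily counts per week.
    SELECT date(day, '-' || strftime('%w', day) || ' days') AS start_of_week,
           COUNT(*) AS days_with_data,
           AVG(requests) AS avg_requests_per_day
    FROM days
    GROUP BY start_of_week
    ORDER BY start_of_week
"""

for week, n_days, avg in conn.execute(WEEKLY_AVERAGE):
    print(f"week of {week}: {avg:.1f} requests/day over {n_days} day(s)")
```

Because the average is taken over the days present, a two-day partial week stays comparable with a full one. One caveat of this sketch: a day with no traffic at all never appears in `days`, so it drops out of the average instead of counting as zero.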

Average requests per day for each week—View query

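Here we introduce a new concept: the `WITH` clause. It defines a named intermediate result, `days`, that the rest of the query can use like a table; here it holds the request count for each day. Next, we use `days` to group those daily counts by `start_of_week` and average them.

We now have a clear picture of when we were receiving traffic during the month, but perhaps we would like to know where the traffic is coming from.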

Let’s take a step back from dates and consider the `requesting_host` column.

Requests by host—View query

Here again we’ve done a simple aggregation on the host and calculated the number of requests.

But this result has some issues. Many of these hosts appear to be similar: hosts such as `piweba3y.prodigy.com` and `piweba5y.prodigy.com` both belong to `prodigy.com`, so we’d like to count them together.

Requests by host (cleaned up hosts)—View query

This query introduces the `REPLACE()` function, which we use together with a regular expression to clean up `requesting_host`. The regular expression, `^(gw\d+|www.*proxy|piweb[^.]+)\.`, reads (roughly)…

- `^` Start at the beginning of the string
- `(` Start group
- `gw\d+` “gw”, followed by 1+ numeric digits
- `|` OR
- `www.*proxy` “www”, then 0+ characters, followed by “proxy”
- `|` OR
- `piweb[^.]+` “piweb”, followed by 1+ characters that are NOT a period
- `)` End group
- `\.` Followed by a period

If this regular expression matches the start of the `requesting_host`, the matched prefix is replaced with an empty string, so related proxy hosts collapse into a single domain. The majority of traffic appears to come from `aol.com` and `prodigy.com`.

Exercise: Can you improve this regular expression to be more generic?


HINT: Search online for “Regex Lookahead”.

Give up? Here is a version that leaves only `host.com`.
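To check your work on the exercise, here is a sketch using Python’s `re` module. The first pattern is the one broken down above; the second is one possible lookahead-based answer (our assumption, not necessarily the linked version): it lazily consumes labels from the left until a lookahead sees exactly two labels left. Apart from the two Prodigy hosts mentioned earlier, the host names are made up.

```python
import re
from collections import Counter

# The cleanup pattern broken down above: strip a leading "gw<digits>.",
# "www...proxy." or "piweb<non-dots>." label from the host.
PROXY_PREFIX = re.compile(r"^(gw\d+|www.*proxy|piweb[^.]+)\.")

# One possible generic answer (our assumption, not the linked version):
# lazily consume labels from the left, stopping at the first dot after
# which a lookahead sees exactly two labels ("host.com") before the end.
ALL_BUT_LAST_TWO = re.compile(r"^.*?\.(?=[^.]+\.[^.]+$)")


def clean_proxy(host: str) -> str:
    """Apply the article's prefix-stripping regex."""
    return PROXY_PREFIX.sub("", host)


def last_two_labels(host: str) -> str:
    """Keep only the final two dot-separated labels, when there are more."""
    return ALL_BUT_LAST_TWO.sub("", host)


# The two piweb* hosts appear in the article; the others are made up.
HOSTS = [
    "piweba3y.prodigy.com",
    "piweba5y.prodigy.com",
    "www-a1.proxy.aol.com",
    "gw2.example.net",
    "ppp12.dialup.example.edu",
]

print(Counter(clean_proxy(h) for h in HOSTS))
print(Counter(last_two_labels(h) for h in HOSTS))
```

The specific pattern leaves `ppp12.dialup.example.edu` untouched, which is exactly the gap the exercise points at. The generic version trades precision for reach, though: a raw IP address such as `192.0.2.17` collapses to `2.17`, and a country-code domain like `example.ac.uk` collapses to `ac.uk`, so you may want to skip all-numeric hosts or special-case two-part suffixes.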

Which page is performing the best? This is a common sort of question that you’ll receive.

Most popular pages—View query

In this query, we’re filtering out images, since we really want to focus on pages. (Images are loaded on multiple pages, so they pollute the data quite a bit.) We’re also stripping the `HTTP/1.0` protocol suffix off each request. From this, it’s pretty clear that `nasa.gov/ksc.html` and `nasa.gov/` are the most popular pages.
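A minimal sketch of the filtering described here, again assuming Python’s `sqlite3`, a hypothetical `logs` table, and made-up rows. Which extensions count as images (`.gif` and `.jpg`) is our assumption; the trailing ` HTTP/1.0` is removed with SQLite’s plain string `REPLACE()`.

```python
import sqlite3

# Made-up rows (requesting_host, datetime, request, status, size).
ROWS = [
    ("host1.example.edu", "1995-08-01 10:00:00", "GET /ksc.html HTTP/1.0", 200, 7280),
    ("host1.example.edu", "1995-08-01 10:00:01", "GET /images/logo.gif HTTP/1.0", 200, 5866),
    ("host2.example.edu", "1995-08-01 10:05:00", "GET /ksc.html HTTP/1.0", 200, 7280),
    ("host3.example.edu", "1995-08-02 11:00:00", "GET / HTTP/1.0", 200, 7074),
    ("host3.example.edu", "1995-08-02 11:00:01", "GET /images/photo.jpg HTTP/1.0", 200, 48000),
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE logs (requesting_host TEXT, datetime TEXT,"
    " request TEXT, status INTEGER, size INTEGER)"
)
conn.executemany("INSERT INTO logs VALUES (?, ?, ?, ?, ?)", ROWS)

MOST_POPULAR_PAGES = """
    SELECT REPLACE(request, ' HTTP/1.0', '') AS page,  -- drop the protocol suffix
           COUNT(*) AS requests
    FROM logs
    -- Images ride along with many pages, so leave them out. LIKE is
    -- case-insensitive for ASCII in SQLite, so '.GIF' is excluded too.
    WHERE request NOT LIKE '%.gif%'
      AND request NOT LIKE '%.jpg%'
    GROUP BY page
    ORDER BY requests DESC
"""

for page, requests in conn.execute(MOST_POPULAR_PAGES):
    print(f"{requests:>5}  {page}")
```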

Most popular page for each day—View query

Here we’re first calculating the number of requests going to each page on each day. We’re then aggregating to the day level and using a pair of more advanced aggregate functions, `MAX()` and `MAX_BY()`, to select just the bits of data we want: the highest request count for each day, and the page that received it. As you can see, on days when it was popular, it was very popular.
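To make the `MAX()`/`MAX_BY()` step concrete, here is a sketch in plain Python. It assumes `MAX_BY(page, requests)` returns the page from the row with the largest request count, as `max_by` does in engines such as Presto/Trino; the (day, page) pairs are made up.

```python
from collections import Counter, defaultdict

# Made-up (day, page) pairs, e.g. already parsed out of the request column.
VIEWS = [
    ("1995-08-01", "/ksc.html"),
    ("1995-08-01", "/ksc.html"),
    ("1995-08-01", "/"),
    ("1995-08-02", "/"),
    ("1995-08-02", "/"),
    ("1995-08-02", "/ksc.html"),
]


def most_popular_per_day(views):
    """Return {day: (top_page, top_count)} for each day."""
    # Step 1: requests per (day, page), like the inner GROUP BY.
    per_page = Counter(views)

    # Step 2: regroup those counts by day.
    by_day = defaultdict(dict)
    for (day, page), count in per_page.items():
        by_day[day][page] = count

    # Step 3: per day, pick the page with the largest count (the MAX_BY
    # part) and that count itself (the MAX part). On ties, max() keeps
    # the first page it saw.
    result = {}
    for day in sorted(by_day):
        counts = by_day[day]
        top_page = max(counts, key=counts.get)
        result[day] = (top_page, counts[top_page])
    return result


for day, (page, count) in most_popular_per_day(VIEWS).items():
    print(day, page, count)
```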

Exercise: Find out how many page views `/ksc.html` had on each day of the month to see if there’s a pattern.

Next, I’m interested in that `size` column.

Total data consumed by each requesting host—View query

Here we’re introducing a new aggregation function, `SUM()`, which adds up the `size` of every request from each host. Perhaps unsurprisingly, these domains are similar to the list from before: more requests means more bytes downloaded. What we should probably do is calculate the average number of bytes per domain instead of the total. I will leave this as an exercise for the reader.
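Under the same assumptions (Python’s `sqlite3`, a hypothetical `logs` table, made-up rows), here is a sketch of the total-bytes query with a per-request average alongside it. If missing sizes are stored as NULL (an assumption about this dataset), `SUM()` and `AVG()` simply skip them while `COUNT(*)` still counts the row.

```python
import sqlite3

# Made-up rows (requesting_host, datetime, request, status, size);
# the None size models a response whose size wasn't recorded.
ROWS = [
    ("piweba3y.prodigy.com", "1995-08-01 10:00:00", "GET /ksc.html HTTP/1.0", 200, 7280),
    ("piweba3y.prodigy.com", "1995-08-01 10:02:00", "GET /ksc.html HTTP/1.0", 200, 7280),
    ("host1.example.edu", "1995-08-01 11:00:00", "GET / HTTP/1.0", 200, 7074),
    ("host2.example.edu", "1995-08-02 09:00:00", "GET /ksc.html HTTP/1.0", 304, None),
    ("host2.example.edu", "1995-08-02 09:05:00", "GET /history.html HTTP/1.0", 200, 1000),
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE logs (requesting_host TEXT, datetime TEXT,"
    " request TEXT, status INTEGER, size INTEGER)"
)
conn.executemany("INSERT INTO logs VALUES (?, ?, ?, ?, ?)", ROWS)

BYTES_PER_HOST = """
    SELECT requesting_host,
           COUNT(*)  AS requests,     -- every row, whether or not size is NULL
           SUM(size) AS total_bytes,  -- NULL sizes are ignored
           AVG(size) AS avg_bytes     -- average over rows that have a size
    FROM logs
    GROUP BY requesting_host
    ORDER BY total_bytes DESC
"""

for host, requests, total, avg in conn.execute(BYTES_PER_HOST):
    print(f"{host:<22} {requests:>3} requests {total:>8} bytes (avg {avg:.0f})")
```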

Exercise: Which domain requested the largest average amount of data per day over the course of the month?

Exercise: What was the largest file downloaded by each domain during the month?

Exercise: Read up on Aggregations and Aggregation Functions and see what else you can find in this data!

I started this journey in a dorm room back in August of ’95. It seems only fitting that we ask, “Are my requests captured here?”

Requests from Virginia Tech—View query

Looks like about 13 MB of data was downloaded to machines at `vt.edu`.

Exercise: Did alumni from your alma mater hit NASA.gov in August ’95? Replace “vt.edu” with the domain of your choice and find out!
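For the exercise, the domain can be passed in as a parameter. This sketch (Python’s `sqlite3`, a hypothetical `logs` table, made-up rows) matches the domain itself or any subdomain of it; a bare `LIKE '%vt.edu'` would also catch an unrelated host such as `notvt.edu`. Megabytes here are 1,000,000 bytes, which is our choice rather than something stated in the article.

```python
import sqlite3

# Made-up rows (requesting_host, datetime, request, status, size).
ROWS = [
    ("host1.cs.vt.edu", "1995-08-01 22:10:00", "GET /ksc.html HTTP/1.0", 200, 400000),
    ("vt.edu", "1995-08-02 01:30:00", "GET / HTTP/1.0", 200, 200000),
    ("host1.notvt.edu", "1995-08-02 12:00:00", "GET / HTTP/1.0", 200, 999999),
    ("host2.example.edu", "1995-08-03 15:00:00", "GET / HTTP/1.0", 200, 123456),
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE logs (requesting_host TEXT, datetime TEXT,"
    " request TEXT, status INTEGER, size INTEGER)"
)
conn.executemany("INSERT INTO logs VALUES (?, ?, ?, ?, ?)", ROWS)

# Match the domain exactly, or any host ending in "." + domain.
# COALESCE turns the NULL that SUM() returns for zero matches into 0.
BYTES_FROM_DOMAIN = """
    SELECT COUNT(*) AS requests,
           COALESCE(SUM(size), 0) AS total_bytes
    FROM logs
    WHERE requesting_host = :domain
       OR requesting_host LIKE '%.' || :domain
"""


def bytes_from(conn, domain):
    """Return (request_count, total_bytes) for a domain and its subdomains."""
    return conn.execute(BYTES_FROM_DOMAIN, {"domain": domain}).fetchone()


requests, total = bytes_from(conn, "vt.edu")
print(f"{requests} requests, {total / 1_000_000:.1f} MB")
```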

Obviously, there probably isn’t much value in digging through 20-year-old server logs. There is value, however, in understanding the tools and methods for aggregating and filtering data. My hope is that you’re now prepared to take these examples and apply them to your own questions. Whether you’re dealing with time series requests, analytics data, or financial transactions, these same methods can be applied to your data.

Want to dig into NASA server logs a bit more? The full dataset is available on data.world. Feel free to explore and query this data as much as you want. Don’t worry, you won’t break it!

Have your own data that you’d like to explore? Create an account on data.world, create a new dataset, and test out your SQL chops. It’s quick, easy and secure.

Learn more in our SQL Docs, or check out our webinar where we discuss some of these topics in more detail.
