Jan 08, 2024

Bryon Jacob

CTO & Co-Founder

Large Language Models (LLMs) are not to be trusted. Period.

This might seem a surprising thing to say, given the advanced capabilities and broad general knowledge of today's powerful foundation models. However, the crux of the matter is that LLMs cannot explain their outputs. You can't trust a result from an LLM, not because it's necessarily inaccurate, but because it's inscrutable. There's no way to audit the answer or check its work.

It's akin to accepting a person's word without any way to verify their claims: a non-starter for critical business decisions. Healthy relationships are built on trust, and an enterprise’s relationship with its ML models is no different. So how do you overcome your trust issues?

Learn how your organization can build AI-powered applications that generate accurate, explainable, and governed responses.

LLMs operate in a realm of statistical probabilities, without deterministic boundaries. They generate answers without referencing any predefined database or “truth source.” It is therefore impossible to cross-check LLM outputs against a source or to demand that they justify their answers. That would be like trying to inspect the contents of a person's brain: you can't.

The ingredients of the recipe used to generate each LLM response cannot be traced or deconstructed; the recipe is inherently a black box. But that is not a reason to despair.

The inherent untrustworthiness of LLM responses doesn't mean that organizations should forsake LLMs. They are as powerful as you've heard; you only need to employ them properly.

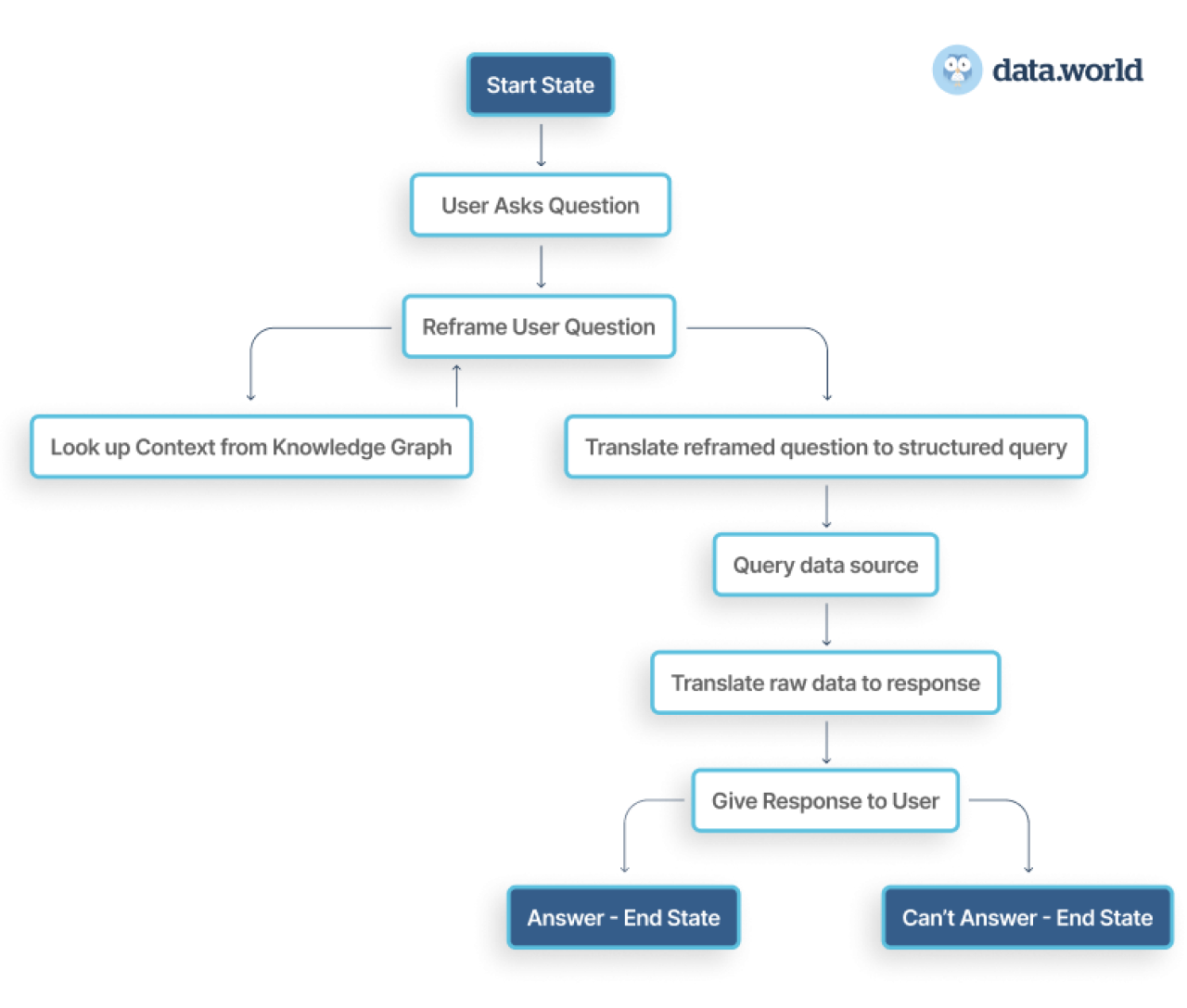

Used correctly, they can be instrumental components of autonomous intelligent agents designed to answer enterprise questions. The trick is to use LLMs to reframe natural language questions into structured queries. When these queries are executed against governed and auditable data sources, they yield accurate (and, most importantly, provably accurate) answers.

Say you are a People Ops manager at your company. You can’t ask ChatGPT, “Which employees are subject to our salary band policy?”

ChatGPT won’t know how to answer that; it doesn’t know how many employees you have, what your salary band policy is, or what other parameters it should consider. That information is scattered across your organization’s data stores, and some of it is private and not available to ChatGPT.

Sure, you could work to train ChatGPT on your data, feeding it your salary band policy, employee rosters, and so on. However, you still can’t verify that its responses are accurate; they’re just a best guess.

Now, change your approach: use an autonomous agent architecture like the one pictured above to handle the query.

You ask the agent, “Which employees are subject to our salary band policy?” The agent can look up your policy in the knowledge graph and understand how it is defined. For this example, say the policy is: “Every employee whose salary is above 95% of the max for their salary band must be reviewed on an annual basis.” The agent can use that knowledge to reframe the question as “Which employees have a salary greater than 95% of the max for their salary band?” That question can be turned into a structured query, which the agent runs to get back an answer. The answer comes with a complete path of context that “shows the work” behind a factual, data-backed response. You, the end user, can verify that the agent looked up the right policy, interpreted it correctly, and ran the correct query.
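To make that flow concrete, here is a minimal Python sketch of the three steps: policy lookup, reframing into a structured query, and execution with a recorded trace. Everything in it is hypothetical and chosen for illustration: the triple-based `KNOWLEDGE_GRAPH`, the `employees` and `salary_bands` tables, the sample rows, and helper names such as `employees_subject_to_policy`. The LLM translation step is replaced by a fixed SQL template so the example runs on its own; it is not meant to describe any particular product's implementation.

```python
import sqlite3
from dataclasses import dataclass, field

# Hypothetical knowledge graph stored as (subject, predicate, object) triples.
# The policy values come from the example; the encoding is an assumption.
KNOWLEDGE_GRAPH = [
    ("SalaryBandPolicy", "thresholdPercent", 95),
    ("SalaryBandPolicy", "requiredAction", "annual review"),
]


def lookup(subject: str, predicate: str):
    """Return the object of the first matching triple, or raise KeyError."""
    for s, p, o in KNOWLEDGE_GRAPH:
        if s == subject and p == predicate:
            return o
    raise KeyError(f"no triple for ({subject}, {predicate})")


@dataclass
class Trace:
    """Ordered record of every step, so a reviewer can audit the answer."""
    steps: list = field(default_factory=list)

    def log(self, step: str, detail) -> None:
        self.steps.append((step, detail))


def reframe_as_sql() -> str:
    # Stand-in for the LLM step: in a real agent a model would translate the
    # reframed question into a query like this. Here it is a fixed template.
    # Integer arithmetic (salary * 100 > pct * max) avoids float rounding.
    return (
        "SELECT e.name FROM employees AS e "
        "JOIN salary_bands AS b ON e.band_id = b.id "
        "WHERE e.salary * 100 > ? * b.max_salary "
        "ORDER BY e.name"
    )


def employees_subject_to_policy(conn: sqlite3.Connection):
    trace = Trace()
    pct = lookup("SalaryBandPolicy", "thresholdPercent")
    action = lookup("SalaryBandPolicy", "requiredAction")
    trace.log("policy lookup", f"salary above {pct}% of band max requires {action}")
    sql = reframe_as_sql()
    trace.log("structured query", sql)
    names = [row[0] for row in conn.execute(sql, (pct,))]
    trace.log("result", names)
    return names, trace


def build_demo_db() -> sqlite3.Connection:
    # Hypothetical schema and made-up sample rows, for illustration only.
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE salary_bands (id INTEGER PRIMARY KEY, max_salary INTEGER);
        CREATE TABLE employees (name TEXT, band_id INTEGER, salary INTEGER);
        INSERT INTO salary_bands VALUES (1, 100000), (2, 150000);
        INSERT INTO employees VALUES
            ('Alice', 1, 97000), ('Bob', 1, 90000),
            ('Carol', 2, 149000), ('Dan', 2, 140000);
        """
    )
    return conn


if __name__ == "__main__":
    names, trace = employees_subject_to_policy(build_demo_db())
    for step, detail in trace.steps:
        print(f"{step}: {detail}")
```

With the sample rows, only Alice and Carol exceed 95% of their band maximum. Printing `trace.steps` shows the policy that was used, the exact query, and the rows it returned, which is the "show the work" property described above. Swapping the template for a real LLM call would change only `reframe_as_sql`; the lookup, execution, and trace stay deterministic and auditable.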

The autonomous agent approach marries the power of AI question-answering with robust explainability and auditability. Companies can thus depend on these smart agents for decision-making, secure in the knowledge that their AI-powered insights are rule-governed and trustworthy.

Despite the significant benefits LLMs offer in managing vast data and complex queries, their use must be governed strictly to ensure transparency, verifiability, and trust. Ultimately, it's not the LLM alone, but the autonomous agent coupled with a knowledge graph and a robust governance framework that can serve as a reliable AI solution for enterprises.

LLMs are neither magical boxes that you can trust implicitly, nor are they a Pandora's box of chaos. With the right approach and stringent governance, they can be harnessed effectively.

By reframing natural language questions into structured queries, we can ensure accuracy, explainability, and governance in AI-powered enterprise solutions. It's not about discarding the box, but about learning how to complement it wisely.

Learn how your organization can build AI-powered applications that generate accurate, explainable, and governed responses.

Large Language Models (LLMs) are not to be trusted. Period.

This might seem to be a surprising thing to say, given their advanced AI capabilities and the obvious general knowledge of today's powerful foundation models. However, the crux of the matter is that LLMs cannot explain their outputs. You can't trust a result from an LLM, not because it's inaccurate, but because it's inscrutable. There's no way to audit the answer or check its work.

It's akin to accepting a person's word without the ability to verify their claims; a non-starter for critical business decisions. Healthy relationships are built on trust. An enterprise’s relationship to its ML models are no different. So how do you overcome your trust issues?

Learn how your organization can build AI-powered applications that generate accurate, explainable, and governed responses.

LLMs operate in a realm of statistical probabilities, without deterministic boundaries. They generate solutions without referencing any predefined database or “truth source.” Therefore, it’s impossible to cross-check LLM outputs or demand they justify their answers. That would be like trying to inspect the contents of a person's brain - you can't.

The ingredients of the recipe used to generate each LLM response cannot be traced or deconstructed; the recipe is inherently a black box. But that is not a reason to despair.

The inherent mistrust built into LLM response context doesn't mean that organizations should forsake them. LLMs are as powerful as you've heard; you only need to employ them properly.

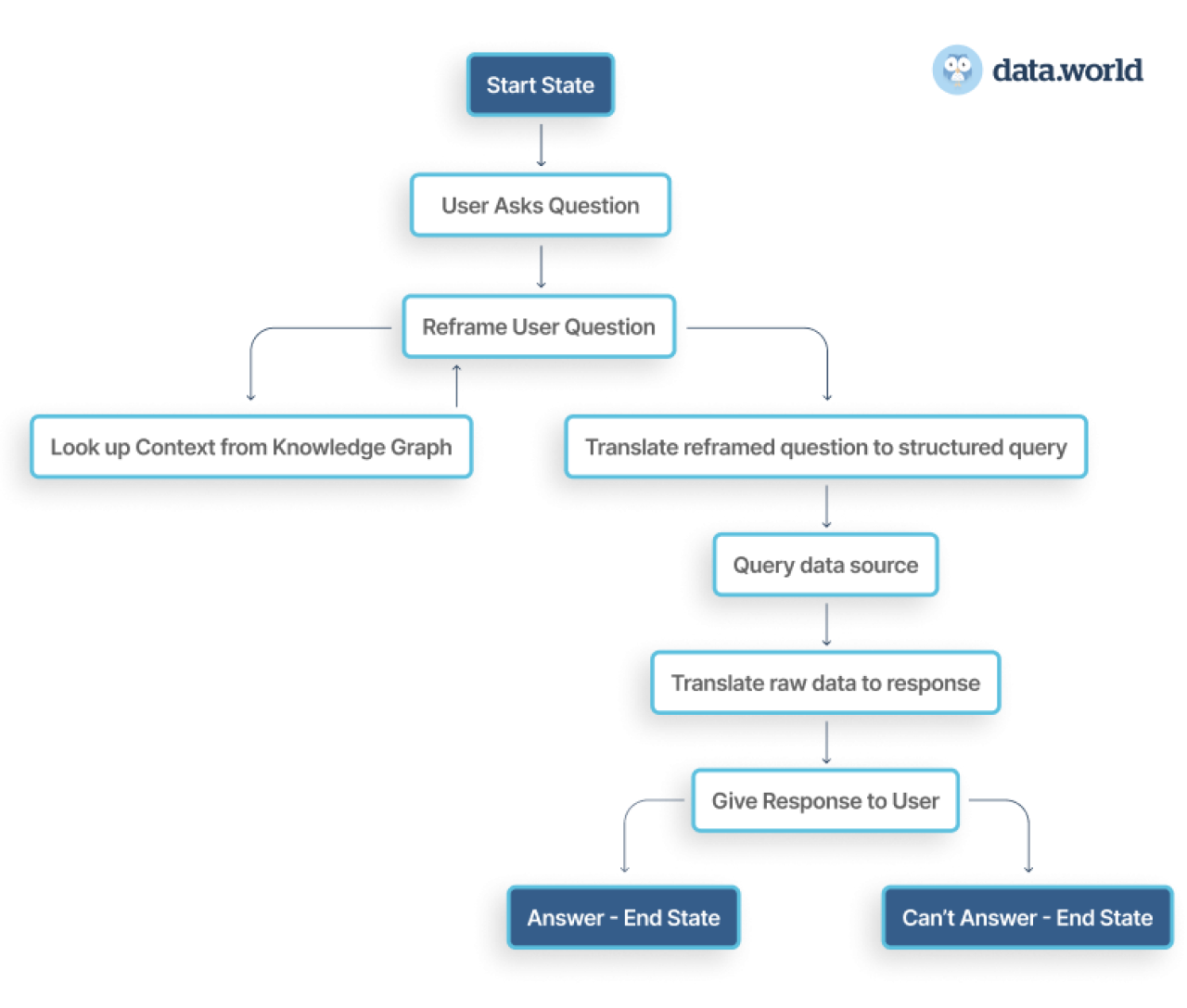

They can be instrumental as a component in autonomous intelligent agents designed to answer enterprise questions, if utilized correctly. The trick is to use LLMs to reframe natural language questions into structured queries. When these queries are executed against governed and auditable data sources, they yield accurate - and most importantly, provably accurate - answers.

Say you are a People Ops manager at your company. You can’t ask ChatGPT, “Which employees are subject to our salary band policy?”

ChatGPT won’t know how to answer that; it doesn’t know how many employees you have, what your salary band policy is, and what other parameters it should be considering. That information is disparate within your organization’s data stores; and some of it is private and not available to ChatGPT.

Sure, you can work to train ChatGPT on your database; feed it information about your salary band policy, plus employee rosters, etc. However, you can’t verify that its responses are accurate; they’re just a best guess.

Now, reframe your approach. Use an autonomous agent architecture like the above to reframe your query process.

You ask the agent, “Which employees are subject to our salary band policy?” The agent can look up your policy in the knowledge graph, and understand how the policy is defined - let's say for this example it is "Every employee whose salary is above 95% of the max for their salary band must be reviewed on an annual basis". It can use that knowledge to reframe the question as "Which employees have a salary that is greater than 95% of the max for their salary band?" That question is one that can be turned into a structured query. The agent can run that query and get back an answer - It has an answer to the question that contains a path of complete and holistic context to “show the work” and arrive at a factual, data-backed response. You, the end user, can verify that it looked up the right policy, interpreted it correctly, and ran the correct query.

The autonomous agent approach marries the power of AI question-answering with robust explainability and auditability. Companies can thus depend on these smart agents for decision-making, secure in the knowledge that their AI-powered insights are rule-governed and trustworthy.

Despite the significant benefits LLMs offer in managing vast data and complex queries, their use must be governed strictly to ensure transparency, verifiability, and trust. Ultimately, it's not the LLM alone, but the autonomous agent coupled with a knowledge graph and a robust governance framework that can serve as a reliable AI solution for enterprises.

LLMs are neither magical boxes that you can trust implicitly, nor are they a Pandora's box of chaos. With the right approach and stringent governance, they can be harnessed effectively.

By reframing natural language questions into structured queries, we can ensure accuracy, explainability, and governance in AI-powered enterprise solutions. It's not about discarding the box, but about learning how to complement it wisely.

Learn how your organization can build AI-powered applications that generate accurate, explainable, and governed responses.